
It’s no stretch to say that stretchable sensors could change the way soft robots function and feel. In fact, they will be able to feel quite a lot.

Cornell researchers have created a fiber-optic sensor that combines low-cost LEDs and dyes, resulting in a stretchable “skin” that detects deformations such as pressure, bending and strain. This sensor could give soft robotic systems – and anyone who uses augmented reality technology – the ability to feel the same rich, tactile sensations that mammals depend on to navigate the natural world.

The researchers, led by Rob Shepherd, associate professor of mechanical and aerospace engineering at the College of Engineering, are working to commercialize the technology for physical therapy and sports medicine.

Their paper, “Stretchable Distributed Fiber-Optic Sensors,” was published on November 13 in Science. The paper’s co-lead authors are doctoral student Hedan Bai ’16 and Shuo Li, Ph.D. ’20.

The design builds on an earlier stretchable sensor, created in Shepherd’s Organic Robotics Lab in 2016, in which light was sent through an optical waveguide and a photodiode detected changes in the beam’s intensity to determine when the material was deformed. The lab has since developed a variety of similar sensory materials, such as optical lace and foams.
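The intensity-based principle described above can be illustrated with the standard optical loss formula, loss (dB) = −10·log10(P/P₀), where P₀ is the photodiode reading with the waveguide at rest and P is the current reading. This is a minimal sketch under stated assumptions: the function names and the 0.5 dB detection threshold are hypothetical, not values from the lab’s work.

```python
import math


def optical_loss_db(power_out: float, power_ref: float) -> float:
    """Optical loss in decibels relative to the undeformed (rest) reading."""
    if power_out <= 0 or power_ref <= 0:
        raise ValueError("photodiode readings must be positive")
    return -10.0 * math.log10(power_out / power_ref)


def is_deformed(power_out: float, power_ref: float, threshold_db: float = 0.5) -> bool:
    """Flag a deformation when loss exceeds a threshold.

    threshold_db is a hypothetical calibration constant, not a published value.
    """
    return optical_loss_db(power_out, power_ref) > threshold_db


# Usage: the reference reading is taken with the waveguide at rest.
ref = 1.00
print(round(optical_loss_db(0.5, ref), 2))  # halving the light is about 3.01 dB of loss
print(is_deformed(0.95, ref))  # about 0.22 dB, below threshold
print(is_deformed(0.80, ref))  # about 0.97 dB, above threshold
```

Working in decibels makes losses from several bends or presses along the same path add rather than multiply, which is why it is a common choice for intensity-based optical sensing.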

For the new project, Bai drew inspiration from silica-based distributed fiber-optic sensors, which detect small wavelength shifts as a way to identify multiple properties, such as changes in humidity, temperature and strain. However, silica fibers are not compatible with soft, stretchable electronics. Smart soft systems also present their own structural challenges.

“We know that soft matter can be deformed in a very complicated and combined way, and there are many deformations occurring at the same time,” Bai said. “We wanted a sensor that could decouple them.”

Bai’s solution was to make a stretchable lightguide for multimodal sensing (SLIMS). This long tube contains a pair of polyurethane elastomeric cores. One core is transparent; the other is filled with absorbing dyes at multiple locations and connects to an LED. Each core is coupled to a red-green-blue sensor chip to register geometric changes in the optical path of the light.
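One way to picture how dyes at different locations can encode position is to compare the color change seen by an RGB chip against a table of known dye signatures. The sketch below is a hypothetical illustration, not the SLIMS method: the dye names, their positions, the signature values and the nearest-match rule (cosine similarity) are all assumptions chosen for clarity.

```python
import math

# Hypothetical absorption signatures (relative attenuation in R, G, B) for three
# dye regions along the dyed core. Names, positions and values are illustrative
# assumptions, not data from the paper.
DYE_SIGNATURES = {
    "dye_A (near fingertip)": (0.9, 0.1, 0.1),
    "dye_B (middle)": (0.1, 0.9, 0.1),
    "dye_C (near base)": (0.1, 0.1, 0.9),
}


def attenuation(rgb_now, rgb_rest):
    """Fractional intensity drop in each color channel relative to the rest state."""
    return tuple(max(0.0, 1.0 - now / rest) for now, rest in zip(rgb_now, rgb_rest))


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def locate(rgb_now, rgb_rest):
    """Return the dye region whose signature best matches the observed color change."""
    att = attenuation(rgb_now, rgb_rest)
    if not any(att):
        return None, att  # no measurable deformation
    best = max(DYE_SIGNATURES, key=lambda name: cosine(att, DYE_SIGNATURES[name]))
    return best, att


rest = (1000.0, 1000.0, 1000.0)
region, att = locate((980.0, 700.0, 990.0), rest)
print(region)  # the green channel dropped most, so this prints "dye_B (middle)"
```

Matching on the shape of the color change rather than its size separates *where* something happened from *how much*, which is the intuition behind calling the dyes spatial encoders.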

The dual-core design increases the number of outputs the sensor can use to detect a range of deformations – pressure, bending or elongation – by lighting up the dyes, which act as spatial encoders. Bai paired that technology with a mathematical model that can decouple, or separate, the different deformations and pinpoint their exact locations and magnitudes.
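To make “decoupling” concrete, here is a minimal sketch that assumes a purely linear sensor response: six readings (R, G and B from each of the two cores) are modeled as a hypothetical 6×3 calibration matrix times three unknown deformation magnitudes (pressure, bending, elongation), and the magnitudes are recovered by least squares through the normal equations. The matrix values and function names are illustrative assumptions; the published model may be nonlinear and is not reproduced here.

```python
# Hypothetical linear calibration: rows are the six color readings, columns are
# sensitivity to (pressure, bending, elongation). Values are illustrative only.
A = [
    [0.9, 0.2, 0.1],  # transparent core, R
    [0.8, 0.3, 0.1],  # transparent core, G
    [0.7, 0.2, 0.2],  # transparent core, B
    [0.2, 0.9, 0.3],  # dyed core, R
    [0.1, 0.4, 0.8],  # dyed core, G
    [0.3, 0.1, 0.9],  # dyed core, B
]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def solve(m, v):
    """Solve the square system m x = v by Gaussian elimination with partial pivoting."""
    n = len(m)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("calibration matrix is rank-deficient")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def decouple(a, b):
    """Least-squares deformation estimate from (A^T A) x = A^T b."""
    at = transpose(a)
    ata = matmul(at, a)
    atb = [sum(p * q for p, q in zip(row, b)) for row in at]
    return solve(ata, atb)


# Simulate a noise-free reading from known magnitudes, then recover them.
true_x = [2.0, 0.5, 1.0]
b = [sum(p * q for p, q in zip(row, true_x)) for row in A]
print([round(v, 3) for v in decouple(A, b)])  # recovers [2.0, 0.5, 1.0]
```

Having more readings (six) than unknowns (three) is what lets least squares tolerate some noise, which mirrors the article’s point that the second core adds outputs for separating combined deformations.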

While distributed fiber-optic sensors require high-resolution detection equipment, SLIMS sensors can work with small optoelectronic components that have lower resolution. This makes them cheaper, simpler to manufacture and more easily integrated into small systems. For example, a SLIMS sensor could be incorporated into a robot’s hand to detect slippage.

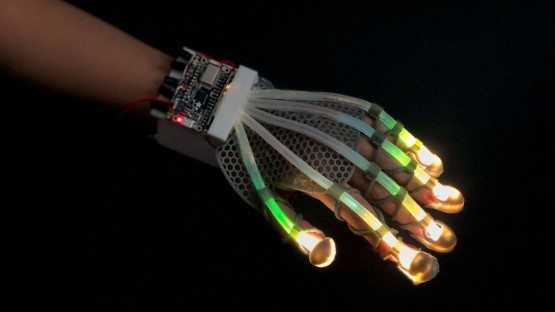

The technology is also wearable. The researchers designed a 3D-printed glove with a SLIMS sensor running along each finger. The glove is powered by a lithium battery and equipped with Bluetooth, so it can transmit data to basic software, designed by Bai, that reconstructs the glove’s movements and deformations in real time.

“Right now, sensing is done mostly by vision,” Shepherd said. “We hardly ever measure touch in real life. This skin is a way to allow ourselves and machines to measure tactile interactions in a way that we now currently use the cameras in our phones. It’s using vision to measure touch. This is the most convenient and practical way to do it in a scalable way.”

Bai explored the commercial potential of SLIMS through the National Science Foundation Innovation Corps (I-Corps) program. She and Shepherd are working with Cornell’s Center for Technology Licensing to patent the technology, with a focus on applications in physical therapy and sports medicine. Both fields have taken advantage of motion-tracking technology, but until now they have lacked the ability to capture force interactions.

Researchers are also looking at ways that SLIMS sensors can enhance virtual and augmented reality experiences.

“The immersion in VR and AR is based on motion capture. Touch is barely there,” Shepherd said. “Let’s say you want to have an augmented reality simulation that teaches you how to fix your car or change a tire. If you had a glove or something that could measure pressure, as well as motion, that augmented reality visualization could say, ‘Turn and then stop, so you don’t overtighten your lug nuts.’ There’s nothing out there that does that right now, but this is an avenue to do it.”

Co-authors include Clifford Pollock, the Ilda and Charles Lee Professor of Engineering; doctoral student Jose Barreiros, M.S. ’20, M.Eng. ’17; and Yaqi Tu, M.S. ’18.

The research was supported by the National Science Foundation (NSF); the Air Force Office of Scientific Research; Cornell Technology Acceleration and Maturation; the U.S. Department of Agriculture’s National Institute of Food and Agriculture; and the Office of Naval Research.

The researchers used the Cornell NanoScale Science and Technology Facility and the Cornell Center for Materials Research, both supported by NSF.
