
Borrowing a page from high-energy physics and astronomy textbooks, a team of physicists and computer scientists at the U.S. Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) has adapted a common error-reduction technique and successfully applied it to the field of quantum computing.

In the world of subatomic particles and giant particle detectors, distant galaxies and giant telescopes, scientists have learned to live and work with uncertainty. They often try to extract ultra-rare particle interactions from a huge tangle of other particle interactions and background “noise” that can complicate their hunt, or try to filter out the effects of atmospheric distortions and interstellar dust to improve the resolution of astronomical imaging.

Additionally, inherent problems with detectors, such as limits on their ability to record all particle interactions or to measure particle energies accurately, can cause data to be misread by the electronics they are attached to. Scientists therefore have to design complex filters, in the form of computer algorithms, to reduce the margin of error and return the most accurate results.

The problems of noise and physical defects, and the need for error-correction and error-mitigation algorithms that reduce the frequency and severity of errors, are also common in the nascent field of quantum computing. A study published in the journal npj Quantum Information found that there appear to be some common solutions as well.

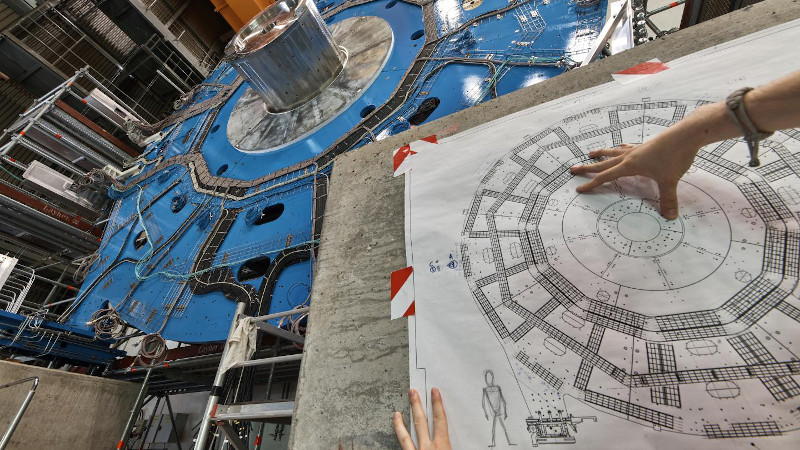

Ben Nachman, a Berkeley Lab physicist who is involved in particle physics experiments at CERN as a member of Berkeley Lab’s ATLAS group, saw the connection to quantum computing while working on a particle physics calculation with Christian Bauer, a Berkeley Lab theoretical physicist who is a co-author of the study. ATLAS is one of the four giant particle detectors at CERN’s Large Hadron Collider (LHC), the largest and most powerful particle collider in the world.

“In ATLAS, we often have to ‘unfold,’ or correct for the effects of the detector,” said Nachman, the lead author of the study. “People have been developing this technique for years.”

In experiments at the LHC, particles called protons collide at a rate of about 1 billion times per second. To cope with this incredibly busy and “noisy” environment, and with the inherent problems of energy resolution and other factors associated with detectors, physicists use error-correcting “unfolding” techniques and other filters to reduce this jumble of particles to the most useful, accurate data.

“We realized that even current quantum computers are very noisy,” Nachman said, so finding a way to reduce this noise and minimize errors – error mitigation – is key to advancing quantum computing. “One type of error is related to the actual operations you perform, and one is related to reading the state of the quantum computer,” he noted – the first type is known as a gate error and the second is called a readout error.

The latest study focuses on a technique for reducing readout errors, called “iterative Bayesian unfolding” (IBU), that is familiar to the high-energy physics community. The study compares the effectiveness of this approach with other error-correction and error-mitigation techniques. The IBU method is based on Bayes’ theorem, which provides a mathematical way to find the probability of an event occurring when other conditions related to that event are already known.

Nachman noted that this technique can be applied to the quantum analog of classical computers, known as universal gate-based quantum computers.
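As a rough illustration of how Bayes’ theorem can be used to undo readout noise, the following Python sketch applies an iterative Bayesian update to a single simulated qubit. It is not the code used in the study: the function name, the uniform starting guess, the error rates in `RESPONSE`, and the `TRUE` distribution are all assumptions chosen for illustration.

```python
# Illustrative sketch only: names and numbers are assumptions, not from the study.

def iterative_bayesian_unfolding(response, measured, iterations=10):
    """Estimate the true outcome distribution from a noisy measured one.

    response[i][j] is the probability of reading outcome i when the true
    outcome is j (each column sums to 1). measured[i] is the observed
    probability (or count) of outcome i.
    """
    n_measured = len(response)
    n_true = len(response[0])
    total = sum(measured)
    # Assumed starting point: a uniform prior over the true outcomes.
    estimate = [total / n_true] * n_true
    for _ in range(iterations):
        # What we would expect to measure if the current estimate were true.
        folded = [
            sum(response[i][k] * estimate[k] for k in range(n_true))
            for i in range(n_measured)
        ]
        # Bayes' theorem: Pr(true j | measured i) = R[i][j] * t[j] / folded[i].
        # Each measured outcome is shared among the true outcomes accordingly.
        estimate = [
            sum(
                response[i][j] * estimate[j] / folded[i] * measured[i]
                for i in range(n_measured)
                if folded[i] > 0
            )
            for j in range(n_true)
        ]
    return estimate


# Hypothetical single-qubit readout noise: a true 0 is read as 1 in 5% of
# shots, and a true 1 is read as 0 in 10% of shots.
RESPONSE = [[0.95, 0.10],
            [0.05, 0.90]]
TRUE = [0.3, 0.7]
# Noisy readout distribution obtained by applying RESPONSE to TRUE.
MEASURED = [
    sum(RESPONSE[i][j] * TRUE[j] for j in range(2)) for i in range(2)
]

if __name__ == "__main__":
    print(iterative_bayesian_unfolding(RESPONSE, MEASURED, iterations=50))
```

In this toy case the estimate moves from the uniform guess toward the assumed true distribution as the number of iterations grows, and every intermediate estimate stays non-negative because each update only multiplies and adds non-negative quantities.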

In quantum computing, which relies on quantum bits, or qubits, to carry information, the fragile state known as quantum superposition is difficult to maintain and can decay over time, causing a qubit to display a zero instead of a one – this is a common example of a readout error.

Superposition means that a quantum bit can represent a zero, a one, or both at the same time. This enables unique computing capabilities not possible in conventional computing, which relies on bits representing either a one or a zero, but not both at once. Another source of readout error in quantum computers is simply a faulty measurement of a qubit’s state due to the architecture of the computer.

In the study, the researchers simulated a quantum computer to compare the performance of three different error-correction (or error-mitigation, or unfolding) techniques. They found that the IBU method is more robust in a very noisy, error-prone environment, and that it slightly outperformed the other two in the presence of more common noise patterns. Its performance was compared with an error-correction method called Ignis, which is part of a collection of open-source quantum-computing software development tools developed for IBM’s quantum computers, and with a very basic form of unfolding known as the matrix inversion method.
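For comparison, the matrix inversion method can be sketched in a few lines of Python for a single qubit: the measured distribution is multiplied by the inverse of the readout response matrix. This is a minimal sketch under stated assumptions, not the study’s implementation; the function names and the error rates in `RESPONSE` are hypothetical.

```python
# Illustrative sketch only: names and numbers are assumptions, not from the study.

def invert_2x2(matrix):
    """Return the inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = matrix
    det = a * d - b * c
    if det == 0:
        raise ValueError("response matrix is singular")
    return [[d / det, -b / det],
            [-c / det, a / det]]


def matrix_inversion_unfold(response, measured):
    """Estimate the true distribution as inverse(response) applied to measured."""
    inverse = invert_2x2(response)
    return [
        sum(inverse[j][i] * measured[i] for i in range(2)) for j in range(2)
    ]


# Hypothetical readout noise: response[i][j] = Pr(read i | true j).
RESPONSE = [[0.95, 0.10],
            [0.05, 0.90]]

if __name__ == "__main__":
    # A measured distribution that is exactly consistent with the noise model.
    print(matrix_inversion_unfold(RESPONSE, [0.355, 0.645]))
    # A measured distribution that has fluctuated below what the noise model
    # allows for outcome 0: the first entry of the estimate comes out negative.
    print(matrix_inversion_unfold(RESPONSE, [0.03, 0.97]))
```

The second call illustrates a known weakness of plain inversion: when the measured frequencies fluctuate, as they do with a finite number of shots, the result can contain negative “probabilities,” whereas an iterative Bayesian update keeps its estimate non-negative by construction.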

The researchers used the simulated quantum computing environment to produce more than 1,000 pseudo-experiments and found that the results for the IBU method were the closest to predictions. The noise models used for this analysis were measured on a 20-qubit quantum computer called IBM Q Johannesburg.

“We took a very common technique from high-energy physics and applied it to quantum computing, and it worked really well, as it should,” Nachman said. There was a steep learning curve. “I had to learn all sorts of things about quantum computing to make sure I know how to translate it and how to implement it on a quantum computer.”

He said he was also very fortunate to find collaborators for the study with expertise in quantum computing at Berkeley Lab, including Bert de Jong, who leads a DOE Office of Advanced Scientific Computing Research quantum algorithms team and an accelerated research project for quantum computing in Berkeley Lab’s Computational Research Division.

“It is exciting to see how the plethora of knowledge that the high-energy physics community has developed to get the most out of noisy experiments can be used to get more out of noisy quantum computers,” de Jong said.

The simulated and real quantum computers used in the study ranged from five qubits to 20 qubits, and the technique is expected to be scalable to larger systems, Nachman said. But the error-correction and error-mitigation techniques the researchers tested will require more computing resources as quantum computers grow in size, so Nachman said the team is focused on making the methods more manageable for quantum computers with larger qubit arrays.

Nachman, Bauer, and de Jong also participated in an earlier study proposing a way to reduce gate errors, which are the other major source of quantum computing errors. They believe that error correction and error mitigation in quantum computing may ultimately require a mix-and-match approach, using a combination of several techniques.

“It’s an exciting time,” Nachman said, as the field of quantum computing is still young and there is a lot of room for innovation. “People have at least got the message about these kinds of approaches and there is still room for progress.” He noted that quantum computing provided a “push to think about problems in a new way”, adding, “It opened up new scientific potential.”
